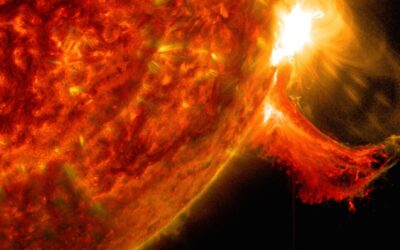

The tech industry is on a tear, building data centers for AI as quickly as they can buy up the land. The sky-high energy costs and logistical headaches of managing all those data centers have prompted interest in space-based infrastructure. Moguls like Jeff Bezos and Elon Musk have mused about putting GPUs in space, and now Google confirms it’s working on its own version of the technology. The company’s latest “moonshot” is known as Project Suncatcher, and if all goes as planned, Google hopes it will lead to scalable networks of orbiting TPUs.

The space around Earth has changed a lot in the last few years. A new generation of satellite constellations like Starlink has shown it’s feasible to relay Internet communication via orbital systems. Deploying high-performance AI accelerators in space along similar lines would be a boon to the industry’s never-ending build-out. Google notes that space may be “the best place to scale AI compute.”

Google’s vision for scalable orbiting data centers relies on solar-powered satellites with free-space optical links connecting the nodes into a distributed network. Naturally, there are numerous engineering challenges to solve before Project Suncatcher is real. As a reference, Google points to the long road from its first moonshot self-driving cars 15 years ago to the Waymo vehicles that are almost fully autonomous today.

Taking AI to space

Some of the benefits are obvious. Google’s vision for Suncatcher, as explained in a pre-print study (PDF), would place the satellites in a dawn-dusk sun-synchronous low-earth orbit. That ensures they would get almost constant sunlight exposure (hence the name). The cost of electricity on Earth is a problem for large data centers, and even moving them all to solar power wouldn’t get the job done. Google notes solar panels are up to eight times more efficient in orbit than they are on the surface of Earth. Lots of uninterrupted sunlight at higher efficiency means more power for data processing.

A major sticking point is how you can keep satellites connected at high speeds as they orbit. On Earth, the nodes in a data center communicate via blazing-fast optical interconnect chips. Maintaining high-speed communication among the orbiting servers will require wireless solutions that can operate at tens of terabits per second. Early testing on Earth has demonstrated bidirectional speeds up to 1.6 Tbps—Google believes this can be scaled up over time.
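
To put that gap in perspective, here is a rough back-of-the-envelope sketch in Python. The 1.6 Tbps figure comes from the ground test described above; the target rates of 10, 20, and 50 Tbps are assumptions chosen only to stand in for "tens of terabits per second," and the idea of stacking identical links in parallel is purely illustrative, not a description of how Google plans to scale.

```python
import math

# Bidirectional rate reported for the early ground test.
DEMONSTRATED_TBPS = 1.6


def scale_factor(target_tbps: float, demonstrated_tbps: float = DEMONSTRATED_TBPS) -> float:
    """Return how many times faster than the demo a single link would need to be."""
    return target_tbps / demonstrated_tbps


def parallel_links_needed(target_tbps: float, demonstrated_tbps: float = DEMONSTRATED_TBPS) -> int:
    """Return the whole number of demo-rate links that together meet the target.

    Assumes (hypothetically) that capacity adds up linearly across links.
    """
    return math.ceil(target_tbps / demonstrated_tbps)


if __name__ == "__main__":
    # Hypothetical targets standing in for "tens of terabits per second".
    for target in (10.0, 20.0, 50.0):
        print(
            f"{target:>5.1f} Tbps target: "
            f"{scale_factor(target):.2f}x the demo rate, "
            f"or {parallel_links_needed(target)} links at {DEMONSTRATED_TBPS} Tbps each"
        )
```

Even the lowest assumed target calls for more than six times the demonstrated rate, which gives a sense of how much scaling remains.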
